How to Upload to an S3 Bucket

Introduction

Amazon Web Services (AWS) is a leading cloud infrastructure provider for hosting your servers, applications, databases, networking, domain controllers, and Active Directory in a widespread cloud architecture. AWS provides the Simple Storage Service (S3) for storing your objects or data with 11 9's (99.999999999%) of data durability. AWS S3 is compliant with PCI-DSS, HIPAA/HITECH, FedRAMP, the EU Data Protection Directive, and FISMA, which helps satisfy regulatory requirements.

When you log in to the AWS portal, you can navigate to S3, choose the required bucket, and download or upload files. Doing this manually in the portal is time-consuming. Instead, you can use the AWS Command Line Interface (CLI), which works well for bulk file operations with easy-to-use scripts. You can schedule the execution of these scripts for unattended object downloads and uploads.

Configure AWS CLI

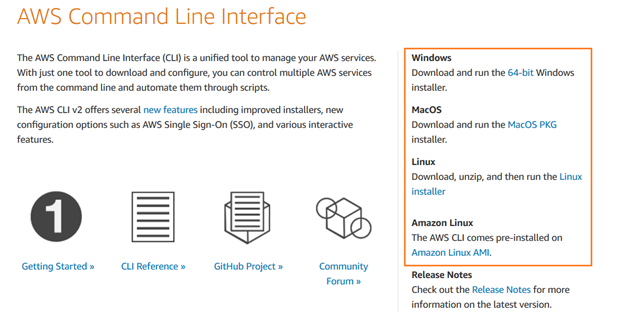

Download the AWS CLI and install AWS Command Line Interface V2 on Windows, macOS, or Linux operating systems.

You can follow the installation wizard for a quick setup.

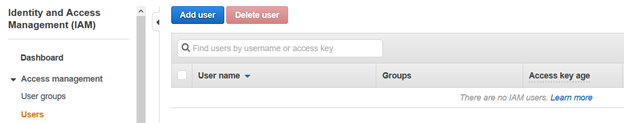

Create an IAM user

To access the AWS S3 bucket using the command line interface, we need to set up an IAM user. In the AWS portal, navigate to Identity and Access Management (IAM) and click Add User.

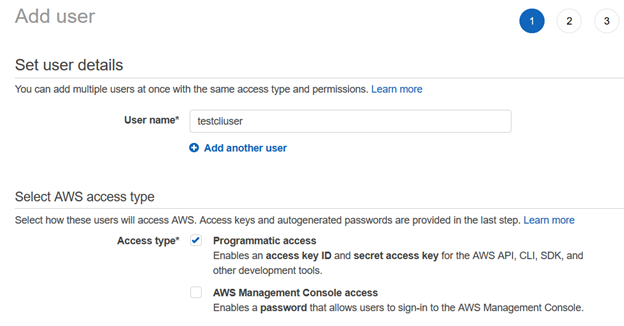

On the Add User page, enter the username and select Programmatic access as the access type.

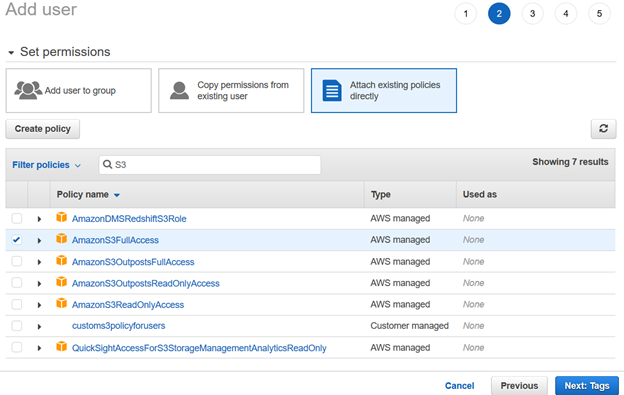

Next, we grant permissions to the IAM user using existing policies. For this article, we have chosen [AmazonS3FullAccess] from the AWS managed policies.

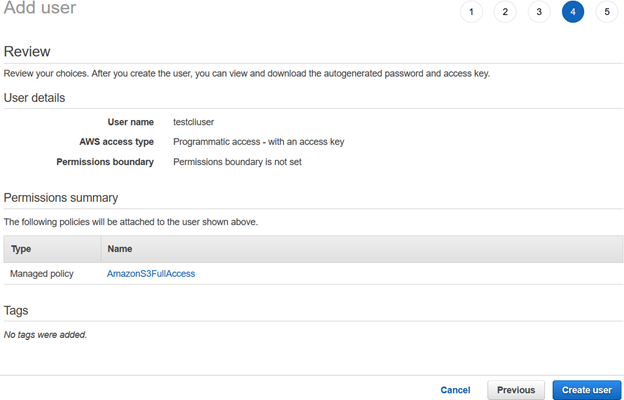

Review your IAM user configuration and click Create user.

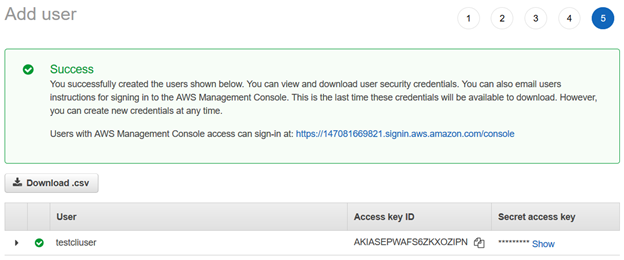

Once the IAM user is created, AWS displays the Access Key ID and Secret Access Key that you use to connect with the AWS CLI.

Note: You should copy and save these credentials. AWS does not let you retrieve the Secret Access Key at a later stage.

Configure AWS Profile On Your Computer

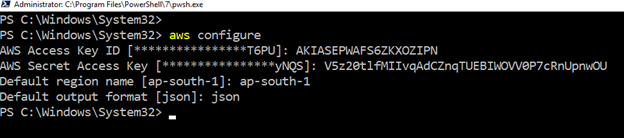

To work with AWS resources from the AWS CLI, launch PowerShell and run the following command.

>aws configure

It requires the following user inputs:

- IAM user Access Key ID

- AWS Secret Access Key

- Default AWS region-name

- Default output format
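For reference, `aws configure` stores these four answers in two INI-style files in the `.aws` folder of your home directory: `credentials` (the two keys) and `config` (region and output format). The sketch below only builds the equivalent text in memory with Python's `configparser`. The key values are placeholders rather than real credentials, and `render_profile` is a hypothetical helper name used for illustration.

```python
import configparser
import io


def render_profile(access_key_id, secret_access_key, region, output_format):
    """Return (credentials_text, config_text) for the [default] profile."""
    credentials = configparser.ConfigParser()
    credentials["default"] = {
        "aws_access_key_id": access_key_id,
        "aws_secret_access_key": secret_access_key,
    }
    config = configparser.ConfigParser()
    config["default"] = {"region": region, "output": output_format}

    rendered = []
    for parser in (credentials, config):
        buffer = io.StringIO()
        parser.write(buffer)  # serialize the profile as INI text
        rendered.append(buffer.getvalue())
    return rendered[0], rendered[1]


# Placeholder values only; never hard-code real keys in a script.
credentials_text, config_text = render_profile(
    "AKIAEXAMPLEKEYID", "example-secret-access-key", "us-east-1", "json"
)
print(credentials_text)
print(config_text)
```

Knowing where these values live makes it easier to check which profile a scheduled script will pick up.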

Create S3 Bucket Using AWS CLI

To store files or objects, we need an S3 bucket. We can create it using either the AWS portal or the AWS CLI.

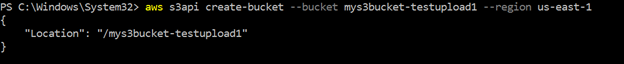

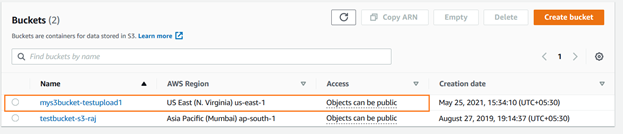

The following CLI command creates a bucket named [mys3bucket-testupload1] in the us-east-1 region. The command returns the bucket name in the output, as shown below.

>aws s3api create-bucket --bucket mys3bucket-testupload1 --region us-east-1

You can verify the newly created S3 bucket using the AWS console. As shown below, [mys3bucket-testupload1] is created in the US East (N. Virginia) region.

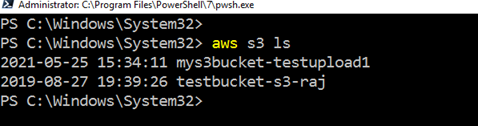
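If you script bucket creation for other regions, note that `create-bucket` needs an extra `--create-bucket-configuration LocationConstraint=<region>` argument for any region other than us-east-1. The following is a minimal sketch that only assembles the argument list. `create_bucket_args` is a hypothetical helper, and eu-west-1 is just an example region that does not appear in this article.

```python
def create_bucket_args(bucket, region):
    """Build the argument list for `aws s3api create-bucket`."""
    args = ["aws", "s3api", "create-bucket", "--bucket", bucket, "--region", region]
    if region != "us-east-1":
        # Outside us-east-1 the API needs an explicit location constraint.
        args += ["--create-bucket-configuration", f"LocationConstraint={region}"]
    return args


print(" ".join(create_bucket_args("mys3bucket-testupload1", "us-east-1")))
print(" ".join(create_bucket_args("mys3bucket-testupload1", "eu-west-1")))
```

The returned list can be passed to `subprocess.run` on a machine where the AWS CLI is installed and configured.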

To list the existing S3 buckets using the AWS CLI, run the following command:

>aws s3 ls

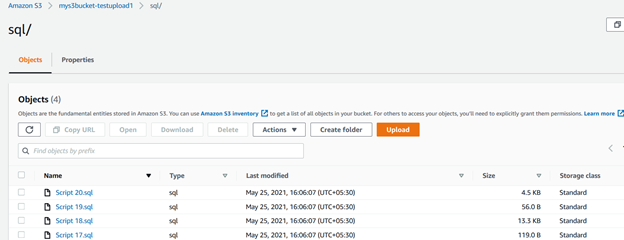

Uploading Objects in the S3 Bucket Using AWS CLI

We can upload a single file or multiple files together to the AWS S3 bucket using the AWS CLI. Suppose we have a single file to upload. The file is stored locally in C:\S3Files with the name script1.txt.

To upload the single file, use the following CLI script.

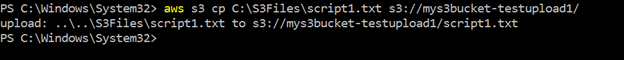

>aws s3 cp C:\S3Files\Script1.txt s3://mys3bucket-testupload1/

It uploads the file and returns the source and destination file paths in the output:

Note: The time to upload to the S3 bucket depends on the file size and the network bandwidth. For demo purposes, I used a small file of a few KB.

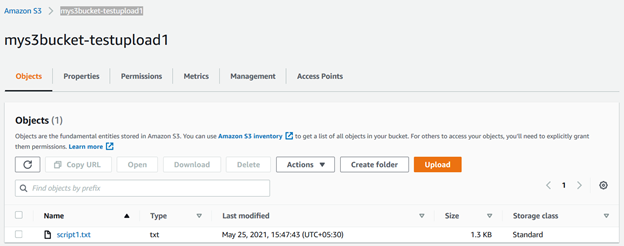

You can refresh the S3 bucket [mys3bucket-testupload1] and view the file stored in it.

Similarly, we can use the same CLI script with a slight modification to upload all files from the source folder to the destination S3 bucket. Here, we use the --recursive parameter to upload multiple files together:

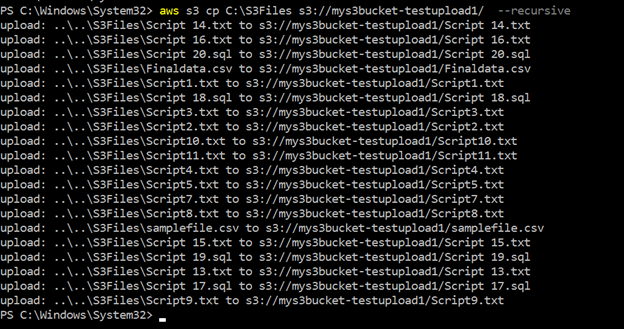

>aws s3 cp c:\s3files s3://mys3bucket-testupload1/ --recursive

As shown below, it uploads all files stored within the local directory c:\S3Files to the S3 bucket. You get the progress of each upload in the console.

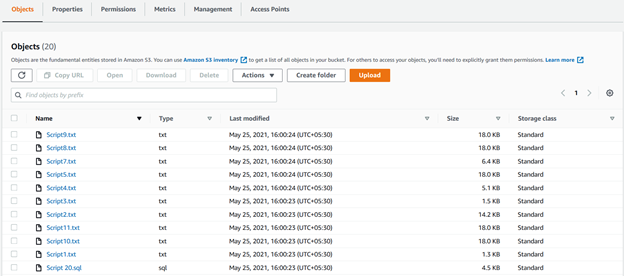
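For unattended uploads, the two `cp` commands above can be wrapped in a small script and run from a scheduler. The sketch below is one possible shape, not a definitive implementation. `build_cp_command` and `upload` are hypothetical names, and the `dry_run` switch is an assumption added here so that the script prints the command without needing the AWS CLI.

```python
import subprocess


def build_cp_command(source, destination, recursive=False):
    """Return the `aws s3 cp` argument list for a file or a folder."""
    command = ["aws", "s3", "cp", source, destination]
    if recursive:
        command.append("--recursive")
    return command


def upload(source, destination, recursive=False, dry_run=True):
    """Print the command; run it only when dry_run is False."""
    command = build_cp_command(source, destination, recursive)
    print(" ".join(command))
    if dry_run:
        return 0
    # Requires the AWS CLI on PATH and a configured profile.
    return subprocess.run(command, check=False).returncode


upload(r"C:\S3Files\Script1.txt", "s3://mys3bucket-testupload1/")
upload(r"C:\S3Files", "s3://mys3bucket-testupload1/", recursive=True)
```

Calling `upload(..., dry_run=False)` executes the command and returns its exit code, which a scheduled job can check to detect failures.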

The following figure shows all files uploaded to the S3 bucket with the --recursive parameter:

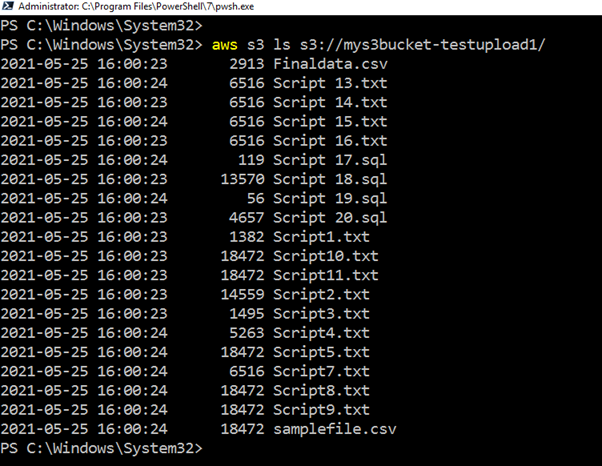

If you do not want to go to the AWS portal to verify the uploaded files, run the following CLI command. It returns all files in the bucket with their upload timestamps.

>aws s3 ls s3://mys3bucket-testupload1

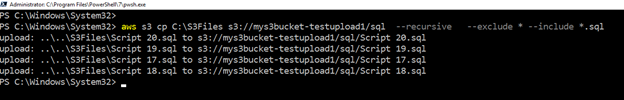
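Each object line in the `aws s3 ls` output contains a date, a time, a size in bytes, and the object key, while folder-like prefixes are listed with `PRE`. If a script needs to check that listing, a parser along these lines may help. The sample lines are made up for illustration, and `parse_ls_line` is a hypothetical helper.

```python
from datetime import datetime


def parse_ls_line(line):
    """Parse one object line of `aws s3 ls` output into a dict, or None."""
    parts = line.strip().split(None, 3)
    if len(parts) < 4 or parts[0] == "PRE":
        return None  # prefix ("folder") lines and blank lines have no timestamp
    date_text, time_text, size_text, key = parts
    return {
        "modified": datetime.strptime(f"{date_text} {time_text}", "%Y-%m-%d %H:%M:%S"),
        "size": int(size_text),
        "key": key,
    }


# Made-up sample output, not taken from the screenshots in this article.
sample = [
    "                           PRE SQL/",
    "2021-09-16 10:15:32       1234 script1.txt",
]
for line in sample:
    print(parse_ls_line(line))
```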

Suppose we want to upload only files with a specific extension into a separate folder of the S3 bucket. You can filter objects using the CLI script as well. For this purpose, the script uses the --include and --exclude parameters.

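For instance, the command below checks files in the source directory (C:\S3Files), filters files with the .sql extension, and uploads them to the S3 bucket. To place them in a separate folder such as SQL/, append that prefix to the destination path. Here, we first exclude all files and then specify the extension using the --include parameter; the wildcards are quoted so that shells such as bash do not expand them before the AWS CLI sees them:

>aws s3 cp C:\S3Files s3://mys3bucket-testupload1/ --recursive --exclude "*" --include "*.sql"

In the script output, you can verify that only files with the .sql extension were uploaded.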

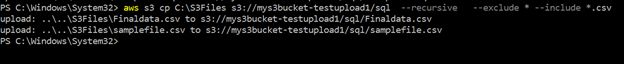

Similarly, the script below uploads files with the .csv extension into the S3 bucket.

>aws s3 cp C:\S3Files s3://mys3bucket-testupload1/ --recursive --exclude "*" --include "*.csv"

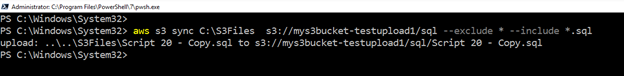

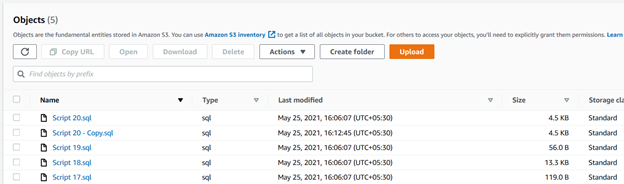
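The order of --exclude and --include matters: every file starts out included, and a filter that appears later in the command overrides an earlier one. The sketch below imitates that rule with Python's `fnmatch` module. It approximates the documented behavior rather than reproducing the AWS CLI's own matching code, and `is_selected` and the file names are hypothetical.

```python
from fnmatch import fnmatchcase


def is_selected(name, filters):
    """Apply (kind, pattern) filters in order; the last matching filter wins."""
    selected = True  # every file is included until a filter says otherwise
    for kind, pattern in filters:
        if fnmatchcase(name, pattern):
            selected = kind == "include"
    return selected


# Hypothetical file names, used only to illustrate the rule.
files = ["backup.sql", "report.csv", "notes.txt"]
sql_only = [("exclude", "*"), ("include", "*.sql")]
reversed_order = [("include", "*.sql"), ("exclude", "*")]

print([f for f in files if is_selected(f, sql_only)])
print([f for f in files if is_selected(f, reversed_order)])
```

The first call keeps only backup.sql. The second keeps nothing, because the trailing exclude of everything overrides the earlier include. This is why the commands above put --exclude before --include.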

Upload New or Modified Files from Source Folder to S3 Bucket

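Suppose you use an S3 bucket to move your database transaction log backups. New backup files keep arriving in the source folder, so you only want to upload what is new or changed instead of copying everything again.

For this purpose, we use the sync command. It recursively copies new and modified files from the source directory to the destination S3 bucket.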

>aws s3 sync C:\S3Files s3://mys3bucket-testupload1/ --exclude "*" --include "*.sql"

The sync command is recursive by default, so it does not take the --recursive parameter. It uploads any matching file that is absent from the S3 bucket. Similarly, if you modify an existing file in the source folder, the CLI script will pick it up and upload it to the S3 bucket.
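According to the AWS CLI documentation, sync by default uploads a local file when the object is missing from the bucket, when the sizes differ, or when the local file was modified more recently than the S3 object. The following sketch models that decision on plain dictionaries. It is a simplified illustration, not the CLI's actual code, and the file names, sizes, and timestamps are invented.

```python
def needs_upload(local, remote):
    """local/remote hold 'size' (bytes) and 'mtime' (epoch seconds); remote may be None."""
    if remote is None:
        return True  # object is absent from the bucket
    if local["size"] != remote["size"]:
        return True  # content length changed
    return local["mtime"] > remote["mtime"]  # local copy is newer


def plan_sync(local_files, remote_objects):
    """Return the sorted names of local files that sync would upload."""
    return sorted(
        name
        for name, info in local_files.items()
        if needs_upload(info, remote_objects.get(name))
    )


# Invented sample data for illustration.
local_files = {
    "log_001.sql": {"size": 2048, "mtime": 1_631_780_000},
    "log_002.sql": {"size": 4096, "mtime": 1_631_790_000},
    "log_003.sql": {"size": 1024, "mtime": 1_631_795_000},
}
remote_objects = {
    "log_001.sql": {"size": 2048, "mtime": 1_631_781_000},  # unchanged
    "log_002.sql": {"size": 3000, "mtime": 1_631_791_000},  # size differs
}
print(plan_sync(local_files, remote_objects))
```

Here log_001.sql is skipped, while log_002.sql (changed size) and log_003.sql (missing from the bucket) are selected for upload.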

Summary

The AWS CLI can make your work easier when storing files in an S3 bucket. You can use it to upload or synchronize files between local folders and the S3 bucket. It is a quick way to deploy and work with objects in the AWS cloud.

Last modified: September 16, 2021

Source: https://codingsight.com/upload-files-to-aws-s3-with-the-aws-cli/
